MiDaS

Model Description

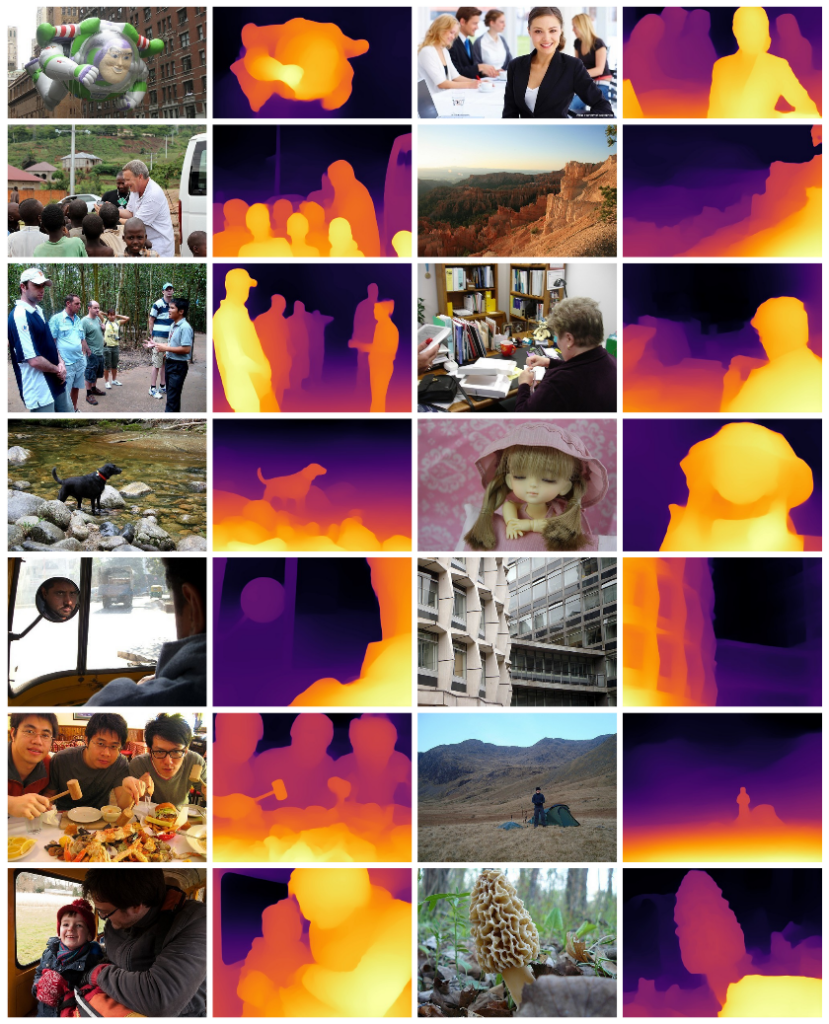

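MiDaS computes relative inverse depth from a single image. The repository provides multiple models that cover different use cases, ranging from a small, high-speed model to a large model that provides the highest accuracy. The models have been trained on 10 distinct datasets using multi-objective optimization to ensure high quality on a wide range of inputs.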
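"Relative" means the prediction is defined only up to an unknown scale and shift: values rank pixels by nearness but are not metric. The sketch below illustrates one way to map a relative prediction onto reference inverse-depth values with a least-squares fit. The helper name `align_scale_shift` and the sample numbers are hypothetical and are not part of the MiDaS API.

```python
import numpy as np

def align_scale_shift(pred, target):
    """Least-squares fit of scale s and shift t so that s * pred + t ~ target."""
    pred = np.asarray(pred, dtype=np.float64).ravel()
    target = np.asarray(target, dtype=np.float64).ravel()
    # Design matrix [pred, 1] for the linear model target = s * pred + t
    A = np.stack([pred, np.ones_like(pred)], axis=1)
    (s, t), *_ = np.linalg.lstsq(A, target, rcond=None)
    return s, t

# Hypothetical data: a relative prediction and reference inverse depth
# that differ only by a scale of 0.5 and a shift of 0.1.
rel = np.array([0.0, 1.0, 2.0, 3.0])
ref = 0.5 * rel + 0.1

s, t = align_scale_shift(rel, ref)
aligned = s * rel + t  # relative prediction expressed in the reference units
```

In practice the reference values would have to come from some external source, such as sparse measurements at a few pixels; without them the output can only be interpreted as an ordering.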

Dependencies

MiDaS depends on timm. Install it with:

pip install timm

Example Usage

Download an image from the PyTorch homepage

import cv2
import torch
import urllib.request

import matplotlib.pyplot as plt

url, filename = ("https://github.com/pytorch/hub/raw/master/images/dog.jpg", "dog.jpg")
urllib.request.urlretrieve(url, filename)

Load a model (see https://github.com/intel-isl/MiDaS/#Accuracy for an overview)

model_type = "DPT_Large"     # MiDaS v3 - Large (highest accuracy, slowest inference speed)
#model_type = "DPT_Hybrid"   # MiDaS v3 - Hybrid (medium accuracy, medium inference speed)
#model_type = "MiDaS_small"  # MiDaS v2.1 - Small (lowest accuracy, highest inference speed)

midas = torch.hub.load("intel-isl/MiDaS", model_type)

Move the model to the GPU if one is available

device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")
midas.to(device)
midas.eval()

Load the transforms that resize and normalize the image for the large or small model

midas_transforms = torch.hub.load("intel-isl/MiDaS", "transforms")

if model_type == "DPT_Large" or model_type == "DPT_Hybrid":
    transform = midas_transforms.dpt_transform
else:
    transform = midas_transforms.small_transform
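Note that the `else` branch above applies the small transform to any name that is not one of the two DPT models, including a misspelled one. As a minimal sketch, the selection could instead be written as an explicit lookup that rejects unknown names. The table `TRANSFORM_BY_MODEL` and the helper `transform_name_for` are hypothetical names introduced here for illustration; only the three model names and two transform attributes shown in this example are assumed.

```python
# Hypothetical lookup table: model names used in this example mapped to the
# attribute names on the object returned by torch.hub.load(..., "transforms").
TRANSFORM_BY_MODEL = {
    "DPT_Large": "dpt_transform",
    "DPT_Hybrid": "dpt_transform",
    "MiDaS_small": "small_transform",
}

def transform_name_for(model_type):
    """Return the transform attribute name, or raise on an unknown model."""
    try:
        return TRANSFORM_BY_MODEL[model_type]
    except KeyError:
        raise ValueError(f"unsupported model_type: {model_type!r}") from None

name = transform_name_for("DPT_Hybrid")
# With midas_transforms loaded as above, the transform itself would be:
# transform = getattr(midas_transforms, name)
```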

Load the image and apply the transforms

img = cv2.imread(filename)
img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

input_batch = transform(img).to(device)

Predict and resize to the original resolution

with torch.no_grad():
    prediction = midas(input_batch)

    prediction = torch.nn.functional.interpolate(
        prediction.unsqueeze(1),
        size=img.shape[:2],
        mode="bicubic",
        align_corners=False,
    ).squeeze()

output = prediction.cpu().numpy()

Show the result

plt.imshow(output)
# plt.show()
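`plt.imshow` rescales the floating-point map to the colormap range on its own. To save the result as an ordinary 8-bit image, the values need to be rescaled explicitly first. The following is a minimal sketch of min-max normalization; the helper name `to_uint8` and the demo array are hypothetical, and this is one possible convention rather than something the MiDaS repository prescribes.

```python
import numpy as np

def to_uint8(depth):
    """Min-max normalize a float map to the 0..255 range as uint8."""
    depth = np.asarray(depth, dtype=np.float64)
    lo, hi = depth.min(), depth.max()
    if hi - lo < 1e-12:
        # Constant map: no contrast to stretch, so return all zeros.
        return np.zeros(depth.shape, dtype=np.uint8)
    scaled = (depth - lo) / (hi - lo)
    return np.round(scaled * 255).astype(np.uint8)

# Hypothetical stand-in for the `output` array produced above.
demo = np.array([[0.0, 1.0], [2.0, 5.0]])
img8 = to_uint8(demo)
```

With the real prediction, `cv2.imwrite("depth.png", to_uint8(output))` would write a grayscale image (the file name is an arbitrary choice). Because the output is inverse depth, larger values, and therefore brighter pixels, correspond to nearer surfaces.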

References

Please cite our papers if you use our models:

@article{Ranftl2020,
    author  = {Ren\'{e} Ranftl and Katrin Lasinger and David Hafner and Konrad Schindler and Vladlen Koltun},
    title   = {Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-shot Cross-dataset Transfer},
    journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)},
    year    = {2020},
}

@article{Ranftl2021,
    author  = {Ren\'{e} Ranftl and Alexey Bochkovskiy and Vladlen Koltun},
    title   = {Vision Transformers for Dense Prediction},
    journal = {ArXiv preprint},
    year    = {2021},
}